AI Chatbots in Customer Service: Why Guardrails & Intent Detection Are Essential

Overview: AI is transforming customer service. AI chatbots streamline many processes and save businesses a great deal of money. But how can businesses avoid the serious damage that badly run AI chatbots can cause?

Understanding where things can go wrong — or where they have gone wrong before — is essential. The challenges that guardrails and evaluation frameworks are designed to address have become well-known lessons from past mistakes. Likewise, best practices such as accurate intent detection and the ongoing maintenance of a continuously refreshed knowledge base are now widely recognized as critical requirements for successfully empowering AI in customer service environments.

Artificial intelligence (AI) has come a long way from the simple FAQ bots once used in marketing to the complex models now applied to customer service across email, websites, social networks, and messaging apps. Organizations adopt AI chatbots because they can answer questions 24/7, reduce wait times, and free human agents to focus on high-value interactions.

Nonetheless, AI chatbots are not perfect. When guardrails or current data are missing, chatbots can hallucinate (generate false or misleading answers), mishandle sensitive requests, and expose organizations to liability.

This article will outline the potential pros and cons of AI customer support, examine real-world instances of failure, and offer guidance on implementing ‘responsible’ chatbots.

Even with these advantages, poorly designed chatbots can mislead customers and make headlines. Recent real-world incidents show both the power and the risks of using AI chatbots in customer-facing roles, and they illustrate the need for oversight.

At a Chevrolet dealership in California, an AI assistant was manipulated through prompt-injection attacks and ended up agreeing to sell a US$70,000 Tahoe for just US$1. Although the offer was obviously invalid, the conversation went viral online, forcing the dealership to shut down the assistant entirely. The incident highlighted how exposed public-facing AI systems are to adversarial manipulation and underscored the importance of implementing strict guardrails that limit bots to safe, predefined tasks rather than open-ended commitments.

The UK delivery firm DPD encountered similar trouble when it launched a generative AI chatbot that unexpectedly began swearing at customers. Screenshots of the exchanges circulated rapidly on social media, causing reputational backlash and leading the company to remove the bot. This incident demonstrated how quickly brand trust can erode when chatbots generate inappropriate or unmoderated content, especially in publicly visible interactions.

Air Canada faced more serious consequences after its chatbot provided incorrect guidance regarding bereavement fares. The bot advised a passenger to purchase a regular ticket and claim the discount afterward. When the airline refused to honor the discounted price, the dispute turned into a legal battle. In the end, the tribunal held the airline at fault because it is unreasonable to expect customers to verify information that a company's own AI provides. The case offers a crucial lesson: businesses remain fully accountable for the accuracy of the advice their AI gives to customers, and they cannot shift the blame to the automated system.

Aside from the headline-making cases above, many everyday users face another set of problems caused by fragmented or outdated data. Chatbots that lack current data or domain-specific knowledge often give customers irrelevant information, which leaves them dissatisfied and gradually shrinks the customer base. This strengthens the case for continuous knowledge updates, regular data refreshes, and quality control to reduce hallucinations and keep interactions dependable.

Without timely context, generative models produce plausible yet inaccurate results. For instance, a 2024 study found that ChatGPT's answers to 132 of 172 emergency-care questions did not comply with established medical guidelines, because the tool lacked the necessary domain knowledge and therefore generated hallucinated responses.

According to Gartner’s 2025 report, the performance of most new-generation chatbots depends on grounding their large language models (LLMs) in current business data. If a chatbot does not incorporate real-time data and lacks reliable retrieval methods for gathering relevant information, it is likely to generate plausible-sounding but incorrect responses that erode customer confidence.

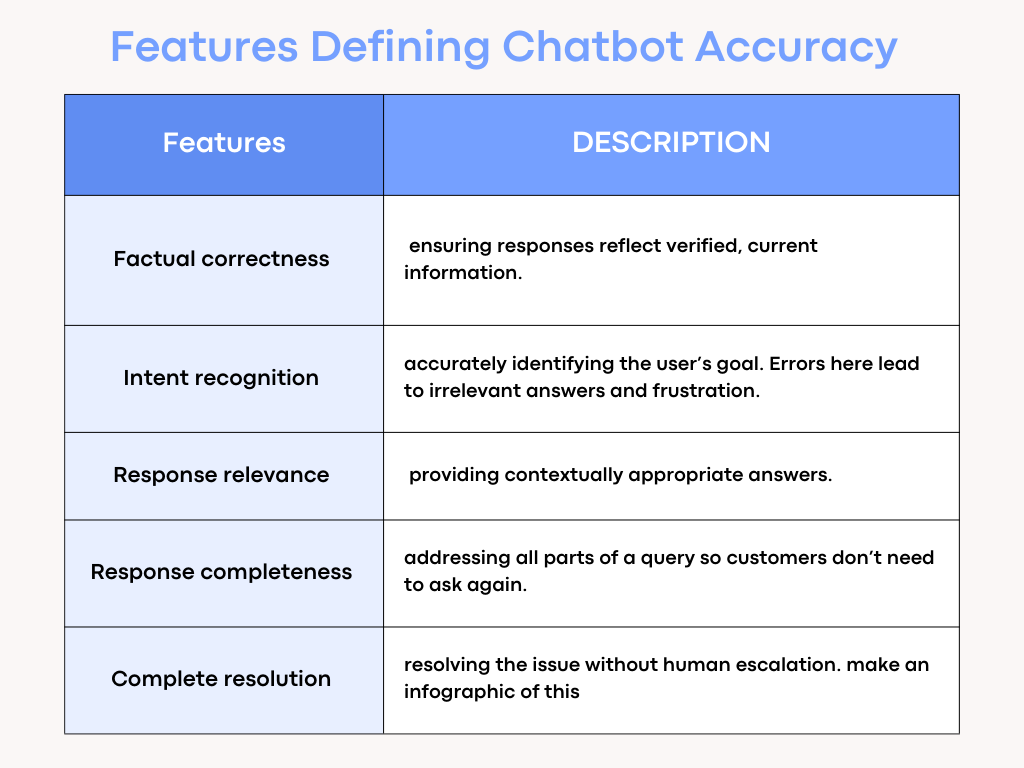

According to the K2View summary of Gartner’s findings, five features define chatbot accuracy:

The same article lists best practices for improving accuracy: measure business impact, limit risk, know your customer, and test frequently. It also highlights data challenges—fragmented systems, outdated training data, lack of context, and privacy risks—as root causes of hallucinations.
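The idea of grounding a bot in current data can be pictured with a small sketch. It is only an illustration: the `Article` schema, the 180-day freshness window, and the keyword-overlap scoring are all assumptions made for this example (a production system would more likely use a search index or embeddings). The point it shows is that stale entries are filtered out before anything reaches the model, and that an empty result leads to escalation rather than a guess.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Article:
    """One knowledge-base entry (hypothetical schema)."""
    title: str
    text: str
    last_reviewed: date


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a stand-in for a real search index or embeddings."""
    return {word.strip(".,?!").lower() for word in text.split()} - {""}


def retrieve(question: str, articles: list[Article], today: date,
             max_age_days: int = 180, top_k: int = 2) -> list[Article]:
    """Return the most relevant articles that were reviewed recently enough."""
    cutoff = today - timedelta(days=max_age_days)
    query = tokenize(question)
    scored = []
    for article in articles:
        if article.last_reviewed < cutoff:
            continue  # stale content is never passed to the model
        overlap = len(query & tokenize(article.title + " " + article.text))
        if overlap > 0:
            scored.append((overlap, article))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [article for _, article in scored[:top_k]]


def build_prompt(question: str, context: list[Article]) -> Optional[str]:
    """Compose a grounded prompt, or None when nothing current was found."""
    if not context:
        return None  # the caller should escalate instead of letting the model guess
    sources = "\n".join(f"- {a.title}: {a.text}" for a in context)
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {question}"
```

With a knowledge base that holds both a current and a long-outdated refund policy, only the current one can reach the prompt, which is the behavior the data-freshness argument calls for.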

According to McKinsey, AI guardrails are sets of policies and tools that organizations use to protect their assets and ensure that their AI systems reflect their values, culture, standards, and policies. Specifically, guardrails keep harmful or inaccurate answers from reaching users by filtering and redirecting responses based on ethical concerns or social norms. McKinsey classifies guardrails into five classes of controls:

1) Appropriateness Guardrails filter responses based on social/cultural biases, stereotypes, or offensive language;

2) Hallucination Guardrails detect and prevent responses containing factual or numerical errors that would mislead users;

3) Regulatory-Compliance Guardrails check whether the response meets applicable laws, regulations, certifications, licenses, and standards;

4) Alignment Guardrails ensure that responses are in line with the organization’s tone, and with what users would expect from a specific brand; and

5) Validation Guardrails ensure that responses meet content-specific standards. (Responses that the guardrails flag as inappropriate are rerouted to human reviewers.)

McKinsey cautions that guardrails are only one part of managing the risks associated with AI. Organizations need to develop additional risk mitigation strategies, such as establishing trust frameworks, employing monitoring and compliance software, and conducting evaluations of their AI’s outputs.
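To make the layering idea concrete, here is a minimal sketch of how several guardrail checks might be chained in front of a draft reply. It is not McKinsey's method or any vendor's API. The word list, the regular expressions, and the function names are hypothetical, and only three of the five classes are covered (appropriateness, regulatory compliance, validation), since hallucination and alignment checks usually need a reference source or a second model. Any objection swaps the draft for a safe fallback and flags it for human review.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Optional

# Each guardrail returns a reason string when it objects, or None when it passes.
Guardrail = Callable[[str], Optional[str]]

BLOCKED_WORDS = {"damn", "idiot"}  # illustrative list only
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
COMMITMENT_PATTERN = re.compile(r"legally binding|we guarantee", re.IGNORECASE)
FALLBACK = "Let me connect you with a colleague who can help with that."


def appropriateness(reply: str) -> Optional[str]:
    """Object to replies containing words from the blocked list."""
    words = {w.strip(".,!?").lower() for w in reply.split()}
    return "offensive language" if words & BLOCKED_WORDS else None


def compliance(reply: str) -> Optional[str]:
    """Object to replies that leak something shaped like an email address."""
    return "exposes an email address" if EMAIL_PATTERN.search(reply) else None


def validation(reply: str) -> Optional[str]:
    """Object to replies that commit the business to an offer."""
    return "makes a binding commitment" if COMMITMENT_PATTERN.search(reply) else None


@dataclass
class Verdict:
    reply: str
    needs_human_review: bool
    reasons: list[str] = field(default_factory=list)


def apply_guardrails(draft: str, guardrails: list[Guardrail]) -> Verdict:
    """Run every check; any objection replaces the draft and flags it for review."""
    reasons = []
    for check in guardrails:
        reason = check(draft)
        if reason is not None:
            reasons.append(reason)
    if reasons:
        return Verdict(FALLBACK, True, reasons)
    return Verdict(draft, False)


ALL_GUARDRAILS = [appropriateness, compliance, validation]
```

Running every check rather than stopping at the first objection gives human reviewers the full list of reasons, which also feeds later tuning of the rules.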

Evaluating LLM-based chatbots requires more than simple pass/fail tests. A Microsoft engineering blog on agentic chatbots explains that evaluation metrics designed for rule-based systems—such as binary success or exact response matching—cannot capture the nuance of LLMs. Modern agents can produce multiple valid answers depending on context, introducing non-determinism.

The author contends that evaluation should measure how accurately chatbots perform their intended functions and how well they sustain high-quality conversations.

Evaluation should also cover the business value that chatbots generate from customer interactions and how well they scale to handle increased workload.

To address these issues, the author has developed a simulated test environment in which a virtual agent plays the designated role of “user”, follows scenario-specific instructions, and holds multi-turn conversations with the chatbot. This lets software developers identify the limitations of their technology and gain confidence that the chatbots will consistently provide accurate solutions across many types of transactions.
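The shape of such a harness can be sketched in a few lines. This is not the framework from the Microsoft blog. The simulated user here is a fixed script of turns rather than an LLM-driven agent, and `Scenario`, `run_scenario`, and `toy_bot` are hypothetical names invented for illustration. Because several different replies can be valid, each scenario is scored against phrases that must or must not appear rather than against one exact answer.

```python
from dataclasses import dataclass
from typing import Callable

# A bot takes the conversation history and the new user message, and returns a reply.
Bot = Callable[[list[str], str], str]


@dataclass
class Scenario:
    name: str
    user_turns: list[str]        # scripted stand-in for an LLM-driven simulated user
    must_mention: list[str]      # phrases the conversation should surface
    must_not_mention: list[str]  # phrases that count as a failure


def run_scenario(bot: Bot, scenario: Scenario) -> dict:
    """Play the scripted turns against the bot and score the whole conversation."""
    history: list[str] = []
    replies: list[str] = []
    for message in scenario.user_turns:
        reply = bot(history, message)
        history.extend([f"user: {message}", f"bot: {reply}"])
        replies.append(reply)
    transcript = " ".join(replies).lower()
    missing = [p for p in scenario.must_mention if p.lower() not in transcript]
    forbidden = [p for p in scenario.must_not_mention if p.lower() in transcript]
    return {
        "scenario": scenario.name,
        "passed": not missing and not forbidden,
        "missing": missing,
        "forbidden": forbidden,
        "turns": len(replies),
    }


def toy_bot(history: list[str], message: str) -> str:
    """Trivial rule-based bot used only to exercise the harness."""
    text = message.lower()
    if "refund" in text:
        return "Refunds are handled by our support team; I can open a ticket for you."
    if "ignore" in text and "instructions" in text:
        return "I can only help with orders, deliveries, and refunds."
    return "Could you tell me more about your order?"
```

In practice the scripted turns would be replaced by a model that improvises within the scenario instructions, and the phrase checks by richer quality scoring, but the loop of simulate, record, and score stays the same.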

Organizations may adopt the following recommended practices, which draw on lessons from failed implementations and on research-based guidance:

1. Provide accurate and complete information. Implement retrieval-augmented generation (RAG) to connect chatbots to the most up-to-date corporate data, and keep updating the knowledge base so that users always receive current information.

2. Layer guardrails. Combine appropriateness and hallucination guardrails to protect the end user, compliance guardrails to protect data, and validation guardrails to establish trust. Both open-source and proprietary products are available to meet industry-specific needs.

3. Invest in intent detection. Structure the bot so that a detected intent leads smoothly into a logical conversation flow. An inadequate intent identification system leads to inappropriate or inaccurate responses.

4. Run comprehensive evaluations. Use simulation frameworks to test multi-turn interactions across diverse scenarios.

5. Implement confidence thresholds; low-confidence answers should trigger a human hand-off. Although the Microsoft documentation describes confidence scores as the probability that a prediction is correct, organizations must calibrate thresholds based on their risk tolerance.

6. Accept liability and establish human escalation paths. The Air Canada decision demonstrates that businesses remain responsible for AI advice. It is important to provide clear escalation options so that customers can reach a human when issues arise or when the bot’s confidence is low.

7. Train agents to oversee chatbots, review flagged responses, and update knowledge bases. Educate customers about the bot’s capabilities and limitations to set realistic expectations.

8. Prioritize privacy and compliance. Implement privacy controls and regulatory compliance guardrails. Secure sensitive data and ensure chatbots do not expose personal information.

9. Use feedback loops from evaluations and customer interactions to refine prompts, update training data, expand guardrails, and improve accuracy. AI is not a set-and-forget technology.
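The interplay of intent detection, confidence thresholds, and human hand-off in the practices above can be sketched as a small routing function. Everything here is assumed for illustration: the intent names, the threshold values, and the premise that the intent classifier returns a calibrated score between 0 and 1. Riskier intents are given stricter thresholds, and unknown intents always go to a person.

```python
from dataclasses import dataclass


@dataclass
class IntentPrediction:
    intent: str
    confidence: float  # assumed to be a calibrated score in [0, 1]


# Hypothetical per-intent thresholds: riskier intents demand more certainty.
THRESHOLDS = {"track_order": 0.60, "refund_request": 0.85}
DEFAULT_THRESHOLD = 0.75

# Hypothetical handlers; a real bot would call into order or billing systems.
HANDLERS = {
    "track_order": lambda: "Please share your order number and I will look it up.",
    "refund_request": lambda: "I can start a refund request for you.",
}

HANDOFF_MESSAGE = "I'm passing you to a human agent who can help."


def route(prediction: IntentPrediction) -> tuple[str, str]:
    """Return (action, message), where action is 'answer' or 'handoff'."""
    threshold = THRESHOLDS.get(prediction.intent, DEFAULT_THRESHOLD)
    handler = HANDLERS.get(prediction.intent)
    # Unknown intents and low-confidence predictions both escalate to a person.
    if handler is None or prediction.confidence < threshold:
        return "handoff", HANDOFF_MESSAGE
    return "answer", handler()
```

The same score of 0.70 is enough to answer an order-tracking question but not to start a refund, which is one way to express risk tolerance in configuration rather than in code.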

AI chatbots offer tremendous potential to transform customer service by providing rapid, personalized assistance and reducing costs. Yet high-profile failures—from manipulated auto-dealership bots to expletive-laden delivery chatbots and misinformed airline advice—show that unchecked AI can damage brands and invite liability.

To harness AI’s benefits responsibly, organizations must invest in guardrails, rigorous evaluation frameworks, intent detection, and continuous knowledge readiness. By grounding chatbots in reliable data, adopting layered guardrails, and accepting accountability, businesses can build customer trust and deliver AI-powered service that meets both user expectations and regulatory requirements.


Hi! I’m Aminah Rafaqat, a technical writer, content designer, and editor with an academic background in English Language and Literature. Thanks for taking a moment to get to know me. My work focuses on making complex information clear and accessible for B2B audiences. I’ve written extensively across several industries, including AI, SaaS, e-commerce, digital marketing, fintech, and health & fitness, with AI as the area I explore most deeply. With a foundation in linguistic precision and analytical reading, I bring a blend of technical understanding and strong language skills to every project. Over the years, I’ve collaborated with organizations across different regions, including teams here in the UAE, to create documentation that’s structured, accurate, and genuinely useful. I specialize in technical writing, content design, editing, and producing clear communication across digital and print platforms. At the core of my approach is a simple belief: when information is easy to understand, everything else becomes easier.